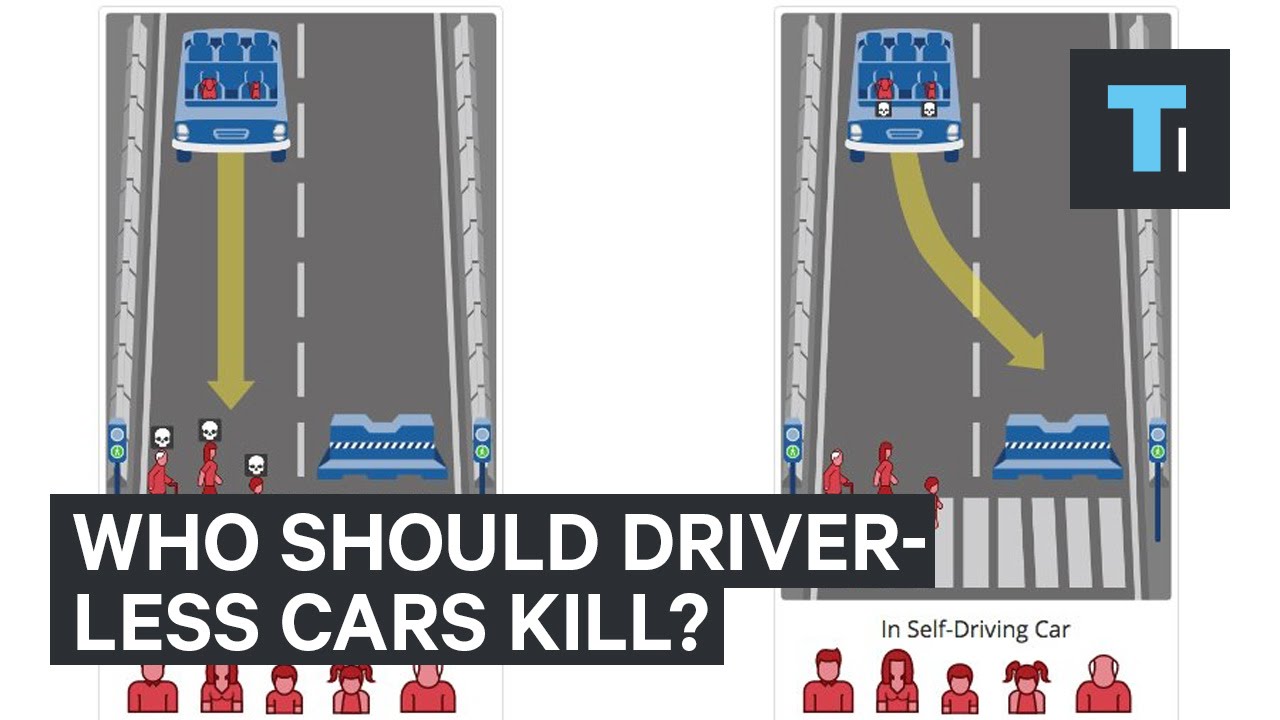

As more companies experiment with self-driving cars, new questions arise about how the vehicles should respond in certain situations. If someone runs into the middle of the street, should the car swerve and kill the driver, or keep going and kill the pedestrian? These are difficult moral dilemmas, so researchers at MIT have created a website called “Moral Machine” that lets people decide what the cars should do in various scenarios.